A GenZ-er Trying to Conceptualize an AGI Future

Posted on 04 January, 2024 / 13 min read

Foreword: As I am not an expert in any field, please take everything here cum grano salis. Actually, cum magnus sacculum salis ☺

Ever since OpenAI's ChatGPT took the world by storm, people can't stop talking about AGI. Many influential figures (e.g. Geoffrey Hinton, Elon Musk) are not confident that AGI will stay under control, while others like Yann LeCun, Fei-Fei Li, and Andrew Ng appear less worried by the possibility. (Why am I starting with this? Well, let's just say all my podcasts have been discussing this lol.) While we've been interacting with AI for decades (e.g. the keyboards on iPhones expand and/or shrink the keys of certain letters under the hood to match their users' habits), it's only recently that AI has arguably begun to develop broad human-like understanding.

Taking it as a given that artificial intelligence will continue improving exponentially due to factors such as zero-shot learning, AutoML, etc., not to mention that the global economy's resources are being invested in improving it, I've tried my hand here at imagining how the economy, society, and human cultures will change as a consequence. Note: if you just wanna talk about models, research, and cool papers, see the appendix at the end.

Living in Five Nights at Freddy's?

Is it just me or are people unable to resist creating anthropomorphic robots equipped with what soon will be AGI? This is just another part of the in-progress internet of things (IoT).

I wonder whether these robots will become commonplace as pedestrians, clerks, flight attendants, security guards, etc. Will they become army soldiers, pilots, librarians...? Will they have rights and be independent because of their ability to think independently and pass the Turing test, or will they be treated like the appliances and electronics we own today, purchased by every household like cars and computers--or will it be a mix of both? How many robots will run companies, schools, and other institutions in place of humans? This touches on a big debate: what I like to call the copilot vs autopilot debate. I'm not going to write an essay about this, but while I think we're definitely still in the copilot stage right now, repetitive tasks seem like they can and will be autopiloted barring contingencies. Anthropomorphic robots are a concept that science fiction has explored for a while, with mixes of these different scenarios.

I also don't want to exclude robots made in the forms of other organisms or even those of completely new forms, even though anthropomorphism is something humanity is often guilty of. After all, most tasks would probably be better handled by a machine whose mechanical design is tailored for the task at hand, rather than one with a humanoid morphology (unless that were beneficial). In many settings, take this restaurant for example, you can order online and have the food mechanically delivered to your table by an arguably non-humanoid robot (although it does still seem a bit anthropomorphic to me). Some restaurants don't even use robots but just use conveyor belts.

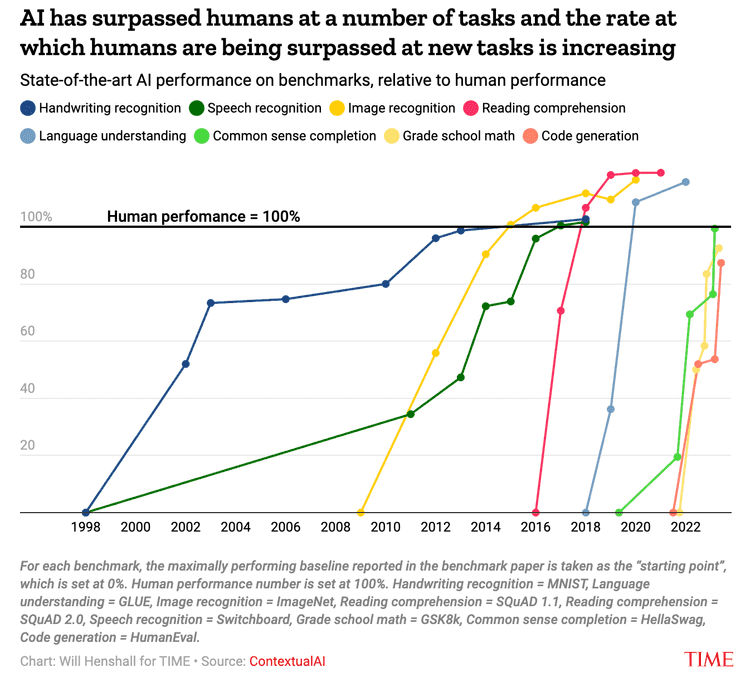

Will these anthropomorphic robots be just for show then? (Sorry for writing the word anthropomorphic so many times.) Or will they specialize in interactions where a human-like morphology feels more authentic? Perhaps they will act as therapists, romantic partners, volunteers at assisted living facilities, etc. After all, if they have AGI they could very well do nearly anything a human could do, from writing this blog post to coding this website to understanding human emotions. See the below chart:

Anthropomorphic robots or not, automation is a trend that has been going on for centuries (lol, this is not an academic paper so I'm not going to cite every concrete detail I write, but if you find this blog post less credible because of that, I'm sorry, and at a future date I shall see if I can formalize this with Wikipedia-esque citations) and is only going to be sped up with AI's help. I wonder how many decades it will take for the above-mentioned jobs to become fully automated--or even for every job to be--and whether there will be significant resistance from people who fear their jobs will be replaced (well, there already is), perhaps similar to the Luddite movement (why is this the only comparison my podcasts are using?). Or, more likely, will AI simply increase our efficiency and allow us to operate on higher-level tasks (it's always hard to distinguish wishful thinking from optimism)? Or will all tasks be done by a super-intelligent AI while the rest of society finally has no actual need to work anymore? Will the utopian ideal of people being able to pursue whatever passions they want without worrying about having to economically support themselves be realized (whoa, I wonder what an economist would have to say on this topic), or will we live in a capitalist dystopia where mega-corporations that control AI first get society's infrastructure to rely on them and then charge exorbitant rates owing to an oligopolistic setup, leading to increased economic inequality and reification of a substantially less malleable socioeconomic pyramid? Or, as some people contend, will AI become some sort of widely democratized technology that will not be dominated by any one or two (or five) big corporations but rather be differentiated into multitudinous subclasses with distinct leaders in each? This third argument feels the most palatable and is quite popular.
I just think that we can't avoid contemplating the other alternatives, since it kind of feels like a lot of people are trying to find ways to justify or cope with the imminent societal change effected by AI rather than taking a purely objective stance. I think any argument has to accept that a significant portion of tasks previously done by humans will be done by AI, since that is already the case.

Anyhow, as a CS major, I'm not the most qualified to get into those economic weeds, so I'll ponder the other nascent technological advances AI is helping to bring about.

Precision Medicine

Computer vision is dramatically improving the quality of medical imaging and thereby yielding higher quality medical data (I do research in this area and have seen some of the cool stuff coming out; it's really promising). NLP is helping to speed up research by making papers more accessible, and machine learning tools are well-adapted for finding meaningful correlations in large datasets such as genomes, proteomes, etc.

Anyhow, with so much data, so much AI, and so many budding biotechnologies, it's not that difficult to think that medicine will be much more effective and personalized in the days to come. Once again, ideas from science-fiction are becoming all the more imminent. I could describe a theoretical picture of what medicine might look like but I will refrain since I imagine the reader is more than capable.

I will venture to say, though, that the fact that Med-PaLM-2 and other LLMs can pass USMLE exams indicates that AI will have the capacity to help doctors handle routine patient checkups, and will likely even be able to do so from the comfort of one's home. There is much more to say here that is left unsaid.

Even More Personalized Marketing

Along with personalized search engines, medicine, etc., there will also be the logical enhancement of marketing based on personal information. AI is already used in personalized ads, but I expect they will become even more personalized. Some people might like this, but I know for many this is annoying.

Self-Driving Cars and Better Public Transport?

Will public transport in the US ever become good? It's already largely automated. Will ridesharing become the new paradigm? Or will things largely stay as they are, with public transport used in large cities while cars (soon to be autonomous) remain the go-to choice in areas of suburban sprawl? There is also talk of flying cars, underground tunnels, spaceflights instead of plane flights, etc. It seems probable to me that there will be a mix of all these in the future. There's still some way to go before the vast majority of people will be comfortable taking autonomous Uber/Lyft rides though.

A More Automated School?

Many people I know, including myself, find it more convenient to watch recorded lectures. If made the norm, this means that if content doesn't change much, a professor could reuse their lectures from previous years and save a lot of time, as Professor David Smallberg does for CS 32 at UCLA. (If you are a professor, please consider doing this, we really appreciate it.) Imagine AI became smart enough to also act as a TA and a professor in answering questions on online forums and grading homework and tests. Then college professors could supervise many, many more courses without having to put much effort into any individual one. It would mean that universities would need to hire fewer faculty to teach an equivalent number of courses. This principle generalizes to nearly all industries, meaning that the number of jobs will stay the same but output will increase due to increased efficiency (if demand has not yet achieved equilibrium), or that the number of jobs will decrease and output will stay constant (or somewhere in between). I'm sorry for being so meticulous and explaining the obvious. Note: this attention to detail translates to how I code in low-level languages like C, because otherwise my programs crash.

For majors like CS, where the demand for classes far exceeds university resources (at least at UCLA lol), AI-powered grading for free-response questions may be the missing key to allowing for increased class sizes.

Privacy

MinD-Vis (which reconstructs images from fMRI scans) and other AI-powered models that rely on brain recordings such as EEG are starting to turn thoughts into images and text (yes, there are also implants that do even better). A person's thought space, previously thought unintrudable, may become less private in the future. With EEGs already being used to exonerate people falsely accused of crimes, development in this area is beginning. This technology may help people who are nonverbal communicate. Companies like Neuralink have been working on deciphering the thought space for a long time, but AI might just be the key to cracking this egg.

Not to mention online security and breaking RSA encryption using quantum computing. That's another apocalypse I haven't thought enough about.

Not to mention AI-powered inferences based on gene data and other biometrics that might make leaking DNA information (cf. 23andMe's data leak, although it seems from what I read that base pairs were not leaked) even more hazardous.

AI-Plagiarism, Deepfakes, Disinformation

As deepfakes improve, with cutting-edge facial recognition and voice mimicry technologies already widespread and text-to-image and text-to-video generation rapidly becoming better, it's an interesting question as to how people will navigate the online world. Essays, articles, speeches, photographs, social media posts, etc. can and/or will all be easily generated by an AI. A proliferation of such content could inundate platforms with inauthentic, misleading, and/or unhelpful information. While forums such as Stack Overflow prohibit AI-generated responses for now, I expect soon they will begin allowing them, albeit perhaps with moderation. With screenwriters having gone on strike, and authors such as George R. R. Martin and newspapers such as the New York Times suing OpenAI for copyright infringement, one wonders where this conflict will lead. I hope it doesn't interfere with elections, but it seems very possible. Even without AI, there is a lot of fake stuff injected on the web. AI seems to just facilitate that.

There seems to be a lot of opportunism going on right now around the AI-hype.

There are AIs that can identify AI-generated content, but there are also AIs that can make AI-generated content unidentifiable. Even in art--with DALL-E, Midjourney, Pika, and so many other technologies excelling at generative artwork--how will people differentiate themselves? Even if using copyrighted materials or PII in training deep learning models becomes illegal, how can such laws be enforced if the generated output is different? Currently AI's skill level in the arts is nowhere near a specialist's, but one wonders if that will soon change. Will AI simply raise the bar or will it set one so high that no human can surpass it? It can already surpass human performance in a lot of tasks, e.g. AlphaGo Zero.

Copiloting

GitHub Copilot, Amazon CodeWhisperer, or even just plain ChatGPT are quite good at solving LeetCode problems. Will LeetCode continue to be a reliable metric for software engineering performance? Moreover, GPT is quite good at generating skeletons of web apps and mobile apps, doing data analysis and machine learning, etc. Currently, though, it is still just a copilot--its output is still sketchy at times. Will we transcend this phase to the point it becomes an autopilot? (I believe it will at least become much better.) Many say that experts in all fields will still be needed to ensure the accuracy of an LLM's output. But what about all the people who aren't those experts (for instance us college students)? What will they do? Simply build things faster using AI? Sometimes I feel like we're all suffering from information overload and the more products that high tech comes out with, the more I feel like I'm trapped in Satoru Gojo's domain expansion (iykyk).

Side note: statistical data science/theory and machine learning is a growing field some might consider a subset of computer science (others would prefer to separate them). That's cool.

Back to the Brain

As I alluded to above, AI is enabling us to penetrate the thought space like never before. If it truly can decode thoughts, products like Neuralink will be revolutionary for treating quadriplegia, other spinal cord injuries, perhaps eventually Alzheimer's disease, etc. In addition to these medical applications, I wonder if it will soon also be used for other purposes such as giving humans super-computer brainpower, allowing them to automatically connect to an AI database of information or at least perform high-throughput computations or truly telepathic communication.

People Who Don't Like AI

The benefits of AI seem to be harnessed the fastest by those who embrace it first. However, not everyone is techy or interested in exploiting AI. How soon will it be before not embracing AI is no longer an option for many people, kind of like not using a computer? As a foolish kid who likes to make random guesses, I guess maybe 8 years? It's still funny how I can remember when flip phones were a thing. It's funny that JavaScript only came out in 1995, TensorFlow in 2015, etc. Will people be able to adapt when the technology changes so fast? Does this have any ageism implications?

AI Takeover

What if someone uses quantum computing to train a superintelligent AI that takes over humanity? One step back: can we do anything about that? Also, why would AI do that? Maybe that's anthropomorphic psychological projection. Others have written much deeper and better stuff on this subject.

Unforeseen Obstacles?

Again, many futuristic AI-powered technologies rely heavily on the availability of compute infrastructures. What is the limiting factor of AGI? On a high level, is it our current understanding of quantum physics and math?

Calming Down and Not Getting Overwhelmed

I don't know about you, but sometimes talking about AI seems overwhelming. Maybe it's because I get into speculating about stuff like the topics above.

Also, there are so many additional things facing human society and the globe: racism, climate change, wars, etc. It can be difficult to think about all these things and not feel overwhelmed. I don't have an answer to this but I just wanted to acknowledge this.

General Implications

As AI continues to take over certain tasks once done by humans, one theoretical possibility is that people who don't want to interact with other live people will be fully capable of living the majority of their lives interfacing solely with robots, chatbots, deepfakes, etc. (Ok, maybe that's very far-fetched but I think it's an interesting concept not enough people have discussed yet.) A question arises as to whether such interactions are less authentic, and whether they will leave lasting impressions on human development that alter social and cultural norms to the point that society 50 years from now will have evolved both on the outside and the inside, as it has already been doing for millennia.

After all this rambling, if you're still reading, I hope you were able to find something to wrestle with in my writing. If not, I guess it seems like AI is just another variable catalyzing change in humanity's history much like electricity or the internet; apocalyptic scenarios of a malevolent AGI like The Terminator's Skynet might be possible, but, regardless, history is moving forward full speed ahead.

Note: yes, there have been many laws passed to regulate AI. We will see if they are completely effective.

Appendix A: Trending AI Tech Stuff

Note: I just started this section and plan to populate it more later.

There's a lot of talk lately around retrieval augmented generation (RAG). RAG is a way to provide relevant information to an LLM about a topic that it wasn't trained on so that it can answer ad hoc questions. This is super exciting, especially when working with private company databases or niche APIs.
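To make the RAG idea a bit more concrete, here is a toy sketch of just the retrieval half, under some loud assumptions: the three "company notes" are made up, word-count cosine similarity stands in for the learned embeddings a real system would use, and there is no actual LLM call (the function only builds the prompt you would send to one). All the names here (`DOCUMENTS`, `retrieve`, `build_prompt`) are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical private notes that an LLM was never trained on.
DOCUMENTS = [
    "The cafeteria is open from 8am to 3pm on weekdays.",
    "Expense reports are due on the last Friday of each month.",
    "The VPN requires a hardware token issued by the IT desk.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(question, documents, k=1):
    """Return the k documents whose word counts best match the question."""
    q_vec = Counter(tokenize(question))
    ranked = sorted(
        documents,
        key=lambda doc: cosine_similarity(q_vec, Counter(tokenize(doc))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question, documents, k=1):
    """Paste the retrieved text into the prompt as context for an LLM."""
    context = "\n".join(retrieve(question, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    print(build_prompt("When are expense reports due?", DOCUMENTS))
```

The point is just the shape of the pipeline: look up the most relevant text first, then hand it to the model alongside the question. Real setups swap the word counts for embeddings and a vector database.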

It's so cool that vision transformers (ViT) can match or beat CNNs on many computer vision benchmarks, especially when pre-trained on large datasets. I guess images and language aren't that different! Multimodal models are the future.

Model inversion is a scary thing when AI models are trained on PII. It's so cool that people are exploring zero-knowledge proofs to verify updates in federated learning workflows without revealing the underlying data. Topics around adversarial AI like the fast gradient sign method are similarly interesting. Cryptography definitely is going to be important!
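Since the fast gradient sign method (FGSM) is simple enough to fit in a few lines, here is a minimal sketch of it. The assumptions: the "model" is a tiny logistic regression with made-up weights (`WEIGHTS`, `BIAS`), the input `X` is made up too, and everything is plain Python rather than a deep learning framework. The idea is to nudge each input feature by `epsilon` in whichever direction increases the loss.

```python
import math

# Hypothetical, hand-picked logistic regression parameters.
WEIGHTS = [2.0, -1.0, 0.5]
BIAS = 0.0

# Hypothetical input that the model classifies as class 1.
X = [0.3, 0.2, 0.4]
LABEL = 1


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    """Probability that x belongs to class 1."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def loss_gradient_wrt_input(weights, bias, x, label):
    """Gradient of the binary cross-entropy loss with respect to x.

    For logistic regression this works out to (p - label) * w.
    """
    p = predict(weights, bias, x)
    return [(p - label) * w for w in weights]


def sign(value):
    return (value > 0) - (value < 0)


def fgsm(weights, bias, x, label, epsilon):
    """Move each feature by epsilon in the direction that raises the loss."""
    grad = loss_gradient_wrt_input(weights, bias, x, label)
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]


if __name__ == "__main__":
    x_adv = fgsm(WEIGHTS, BIAS, X, LABEL, epsilon=0.3)
    print("clean prediction:      ", predict(WEIGHTS, BIAS, X))
    print("adversarial prediction:", predict(WEIGHTS, BIAS, x_adv))
```

With these toy numbers, a perturbation of at most 0.3 per feature is enough to push the prediction to the other side of 0.5. On image models the same trick works with changes too small for a person to notice, which is what makes it unsettling.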

Even though LLMs require tons of compute, reinforcement learning (RL) is something most can do on their laptops. Beware video games, super NPCs are coming!
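As a laptop-sized illustration, here is a sketch of tabular Q-learning, one of the simplest RL algorithms. The environment is invented for the example: a corridor of six cells where the agent starts at cell 0 and gets a reward of 1 for reaching cell 5. The hyperparameters (`alpha`, `gamma`, `epsilon`) are arbitrary picks, not tuned values.

```python
import random

N_STATES = 6          # cells 0..5; the reward sits at cell 5
ACTIONS = [-1, +1]    # step left or step right
GOAL = N_STATES - 1


def step(state, action):
    """Move within the corridor; reaching the goal pays 1 and ends the episode."""
    next_state = min(max(state + action, 0), GOAL)
    done = next_state == GOAL
    reward = 1.0 if done else 0.0
    return next_state, reward, done


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Learn a table of Q-values with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Explore with probability epsilon, or when the two actions are tied.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, ACTIONS[a])
            # Q-learning update: move the estimate toward
            # reward + gamma * (best value of the next state).
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


def greedy_policy(q):
    """Best action in each non-goal cell according to the learned table."""
    return [ACTIONS[row.index(max(row))] for row in q[:GOAL]]


if __name__ == "__main__":
    print(greedy_policy(train()))
```

The learned policy ends up stepping right in every cell, which is the whole "game" here. Swap the corridor for a grid world or a simple video game and the same update rule still applies, just with a bigger table.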

Appendix B: Bad AI Jokes

Why don't RLHF models ever get lost?

Because they're always fine-tuning their path!

Why was the LLM afraid of the internet?

Because it had too many hyperlinks and not enough hyperparameters!

Why did the Transformer model refuse to work weekends?

Because it was afraid of overfitting its social life!

Why was the NLP model so bad at parties?

Because it could only handle short tokens and always misunderstood the context!

Why did the RNN break up with its partner?

It kept bringing up things from the past!

Why don't LSTMs make good comedians?

Because they tend to remember the punchline long after the joke is over!

Why was the CNN so good at parties?

Because it could recognize a face in any crowd!

Why doesn't a GAN ever play poker?

Because every time it bluffs, the discriminator calls its bluff!

Why don't VAEs make good detectives?

They're always blurring the details!

Why don't GNNs make good secret agents?

Because they're always connected and can't keep anything discrete!